by Jeff Wexler CAS and Donavan Dear CAS

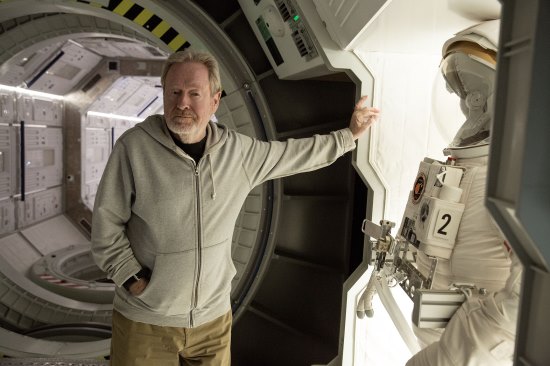

Jeff Wexler: When the call came in for Roadies, I knew I had to do it. I was not available to work on the pilot, which they shot in Vancouver, Canada, as I was on a feature. Showtime gave it the green light for a full season, and though I was pretty much semi-retired, I really wanted to do the show. Don Coufal and I have done six movies with Cameron Crowe, and Roadies would be the first episodic television show for both Cameron and me. I was a little worried, since I had not done any episodic television and had heard all the horror stories. But there was no need to worry: Cameron had not developed any of those awful habits, and shooting the first two episodes with Cameron directing was wonderful, just like working on any of the movies with him. It was a bit of an adjustment for me to be doing nine pages a day instead of the one and a half I was used to.

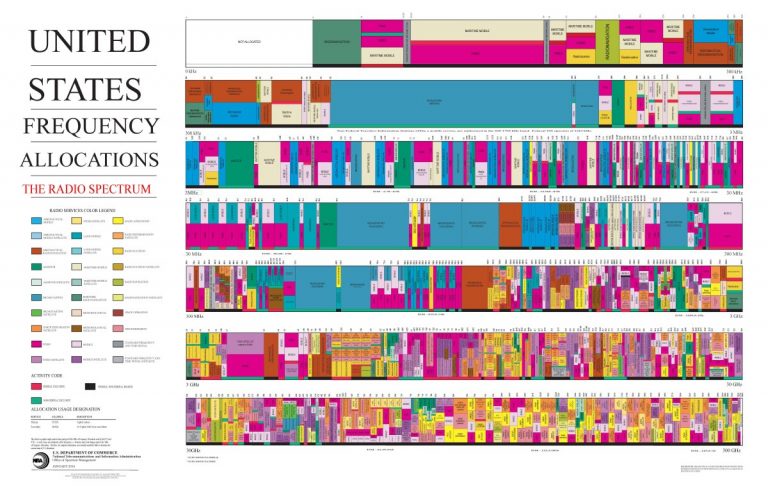

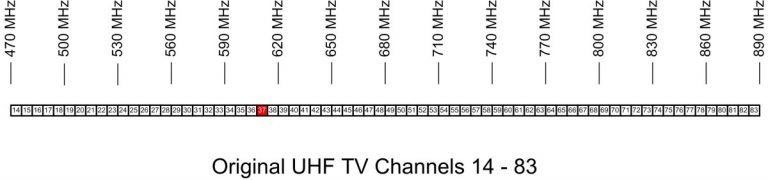

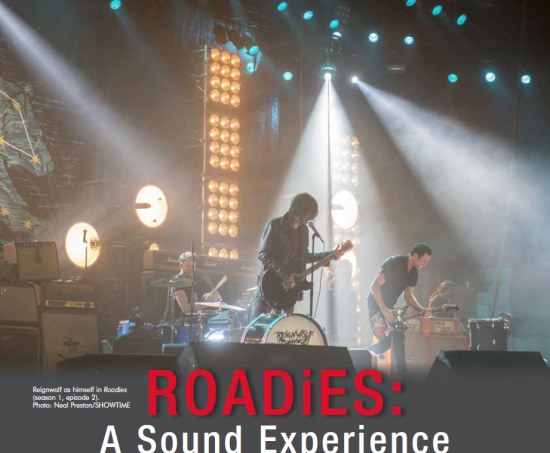

Each episode was to have one or more music scenes, and in preproduction we had lots of discussions about how to approach them: shoot to prerecorded playback tracks, shoot to playback with live vocals, or do it all live. Many of the scenes took the form of impromptu songs performed in dressing rooms, hotel rooms and rehearsals, mixing music and dialog and starting and stopping: the sort of scenes that are best done live. The final decision was to record all the music live.

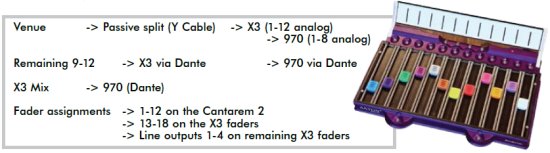

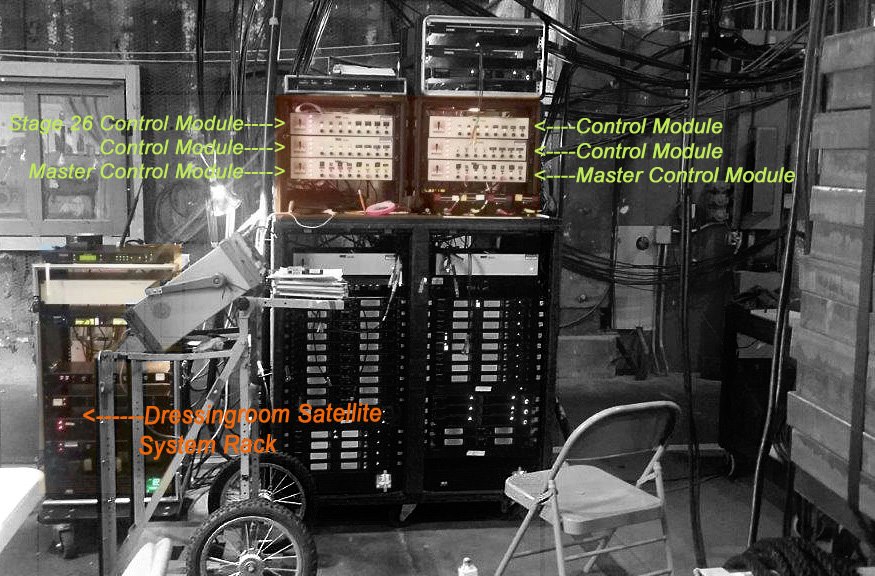

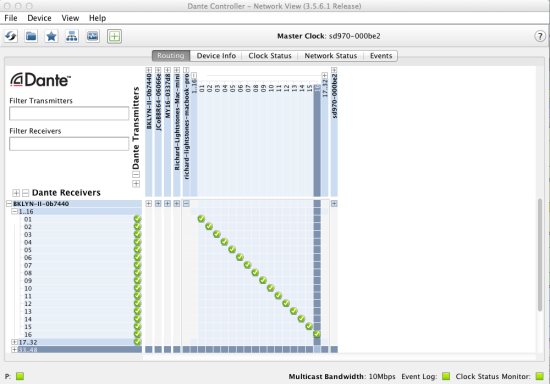

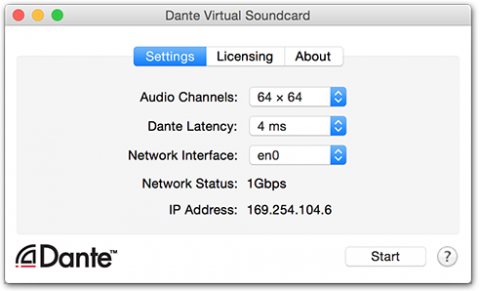

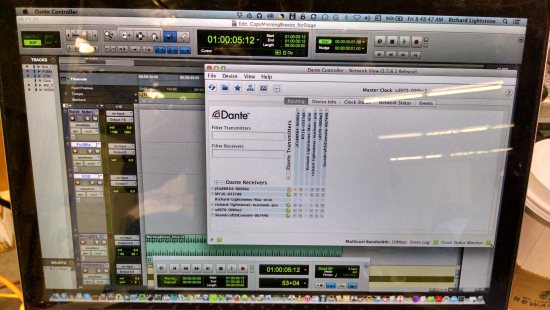

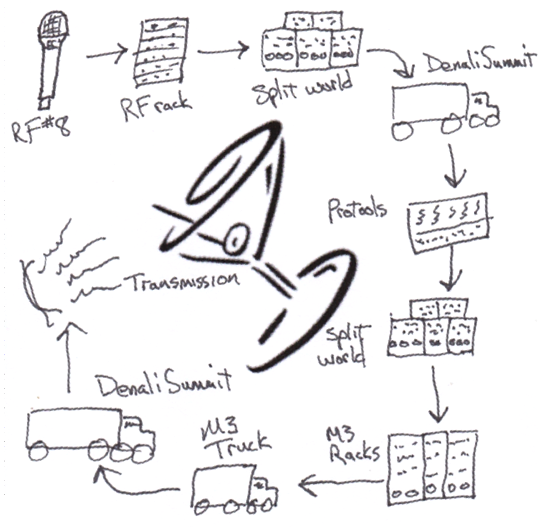

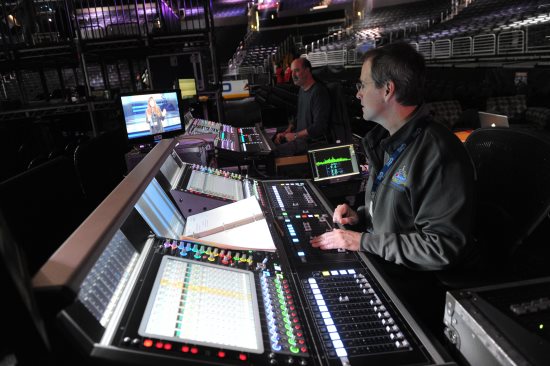

I have done lots of music in movies (playback, live recording, concert recordings with a remote truck and so forth), and I already knew that the Production Sound Mixer needs help with all of this, whether it is as simple as hiring a Playback Operator or as complex as interfacing with a remote truck for a full-on concert recording. I requested that Production hire Gary Raymond and an assistant for all of the live recording. We added Bill Lanham, a veteran concert engineer who proved to be a vital part of the music crew, to Gary’s crew. Gary was set up to record directly into Pro Tools with all sources in use for the scene. Some of the performances were fairly simple (one person, solo guitar), but others were quite a bit more complex: full-on concert setups. I was so pleased to be able to record Lindsey Buckingham singing “Bleed to Love Her,” only Lindsey and his amazing guitar playing, captured with a single Schoeps overhead. As with so many of the things we have done together, all the “mixing” of this beautiful sound was done by Don Coufal with his fishpole.

It was always the plan that I would do only the first two episodes, which Cameron was directing; I was really not up to doing the full season, so I asked Donavan Dear to come in and replace me. Donavan was so pleased to come onto what turned out to be one of the best TV experiences ever. Don Coufal stayed on the job, which helped immensely in terms of preserving continuity on the show, and Donavan was, of course, pleased for the chance to work with Don. After the first two episodes, new directors were brought in, as is usually the case with episodic television, but Cameron was there most days and directed the last episode.

I’m just so pleased that I got to do those two episodes and to work with Cameron Crowe again.

Donavan Dear: When Jeff Wexler asked me to take over for him on Roadies, I said I’d love to do it. A few weeks later, Jeff introduced me to Cameron Crowe. Cameron took a lot of time talking with me and getting to know me. I’ve done many television shows and have never seen a director take more than a minute to meet the new Sound Mixer. We talked about our love of music and how it could be used to shape the actors’ performances. This was the first clue that Roadies was going to be different. Taking over for Jeff Wexler was very flattering, and getting to work with Don Coufal on the boom was something I was really looking forward to.

Roadies Was Different

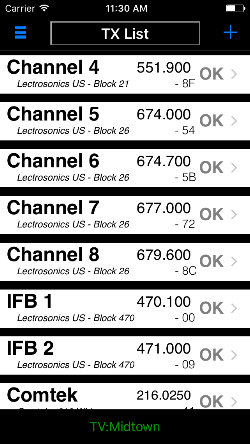

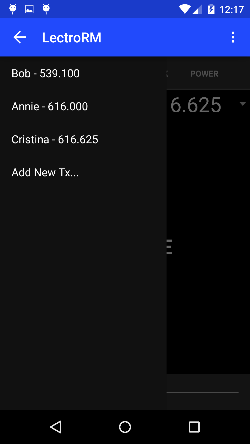

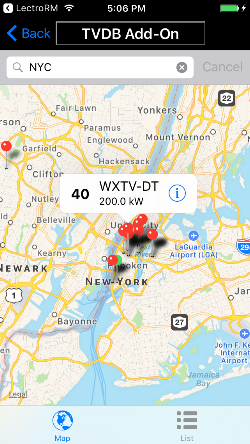

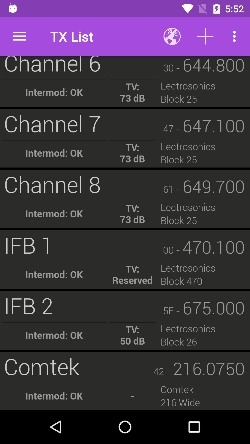

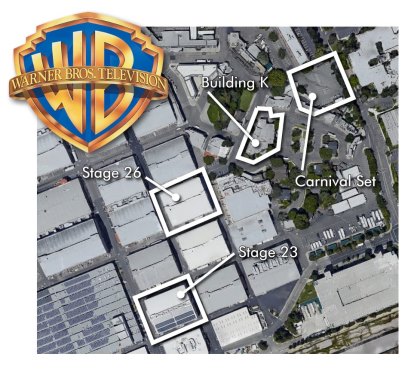

Roadies was different: from the start, it was essentially a very long feature about music and the people who made it happen. Cameron had decided that he wanted live music performances, which meant not only that the performers would play live, but also that the sound system would be real, from the arena speakers to the concert desk, monitors and amps. Jeff Wexler smartly decided the PA system should be managed and set up by concert-sound experts, so he hired Gary (Raymond) and Bill (Lanham), who set up the entire PA system a day or two before each performance. I would simply take a stereo left/right mix directly out of their console; the speaker levels were always set so as not to interfere with the multitrack recording.

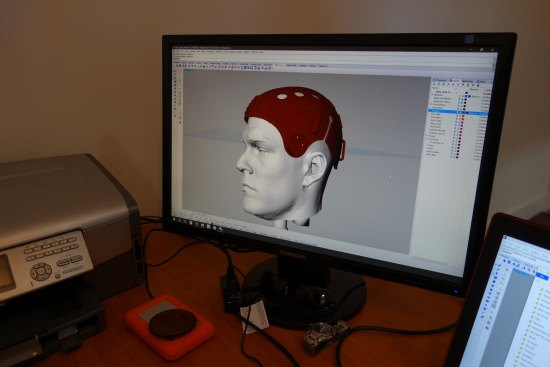

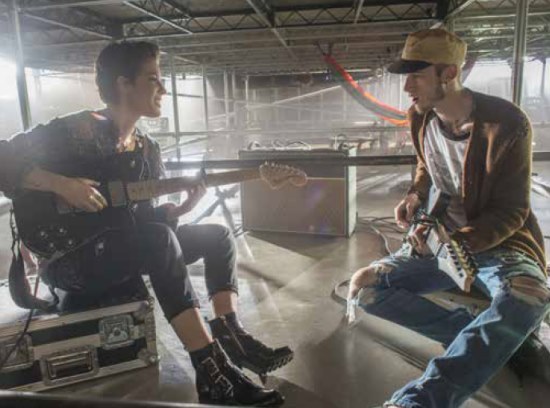

One of the other interesting facets of Roadies was the live recordings that weren’t stage performances. There were usually a couple in each episode: an artist would start “noodling” on a guitar throughout a scene with one of the roadies, or would just sing a song to tell a story. This was a lot of fun. We recorded Halsey with one of the roadies, Machine Gun Kelly (aka Colson Baker), playing and singing with two electric guitars beneath the stage. The biggest challenge was getting a consistent mix across multiple cameras and different angles in such a poor acoustic environment. This is where Don’s listening was so important. It’s simple to play back a prerecorded track and have the actors lip-sync, or even to record the first take of a performance live and then play back that recording to keep later takes consistent. Instead, we recorded every shot and every angle live. When the cameras turned around and the positions of the actors and amplifiers changed, so did the character of the sound. The actors usually could not sing and play the music the same way from take to take. This is why it’s so important for the Boom Operator to listen. There is no formula for positioning a microphone to capture the same musical tonality; there is only your memory of how the last setup sounded and how to place a microphone for the best sound and consistency. Don Coufal and the editors did an outstanding job of preserving great live performances. More often than not, our biggest problem was the balance between the louder acoustic guitars and the softer singing voices, a balance Don would often nudge to give us a little more voice.

Boom Philosophy

There are two kinds of Boom Operators: ‘hard cuers’ and ‘floaters.’ Don Coufal and I are on opposite sides of these philosophies, but I had so much respect for and trust in Don that I let him do what he does best. My regular Boom Operators are always aggressive, cueing very hard and getting the mic as close to the frame line as possible, whereas Don concentrates on listening very diligently to the background ambience and cueing to the voice, creating a smooth, consistent background. Don Coufal is probably the only Boom Operator I know whom I would trust to use his method.

Don and I had some great conversations about microphones and technique; whenever we talked about microphones, acoustics or the tone of a particular actor’s voice, I could see the excitement in his eyes. I knew he was someone I could trust completely. A sound man needs to be excited about equipment, about learning, and about ways to approach an actor with a sound problem so that the actor feels comfortable accommodating the request.

Don made a believer out of me. Boom Operators have to learn every line in the script and point the mic at the actor’s sweet spot no matter what technique they use. There is a movie/TV difference, though: in general, a sound crew on a feature has more opportunities to quiet the set, whereas a TV crew often doesn’t have time to put out all the ‘noise fires.’

When it comes down to it, the floating style cuts together nicely, with a bit more background noise, whereas a hard-cue technique gives more proximity effect and less background noise, but a less consistent background ambience. All in all, the most important things about boom technique are listening and experience. Don Coufal excels in both.

Cameron Crowe

Roadies was very special because of Cameron Crowe, and music is very special to him. There were times during a take when an AD would run over to me and say that Cameron wanted me to play one of four songs between the lines or at a certain moment, such as the end of the shot. We always had a playback speaker, several music apps and 150,000 of my own songs ready to go at all times. Cameron had his own playback/computer desk that Jeff built for him so he could play music to set the tone for an actor’s performance or set a mood for the crew before a scene. Cameron communicated uniquely through music; he wasn’t a very technical director, but he had an amazing way of tuning and changing a performance with his choice of music. The goal of Roadies was to move people with great music and sound. I was so happy to be a part of such a special show.